Introduction:

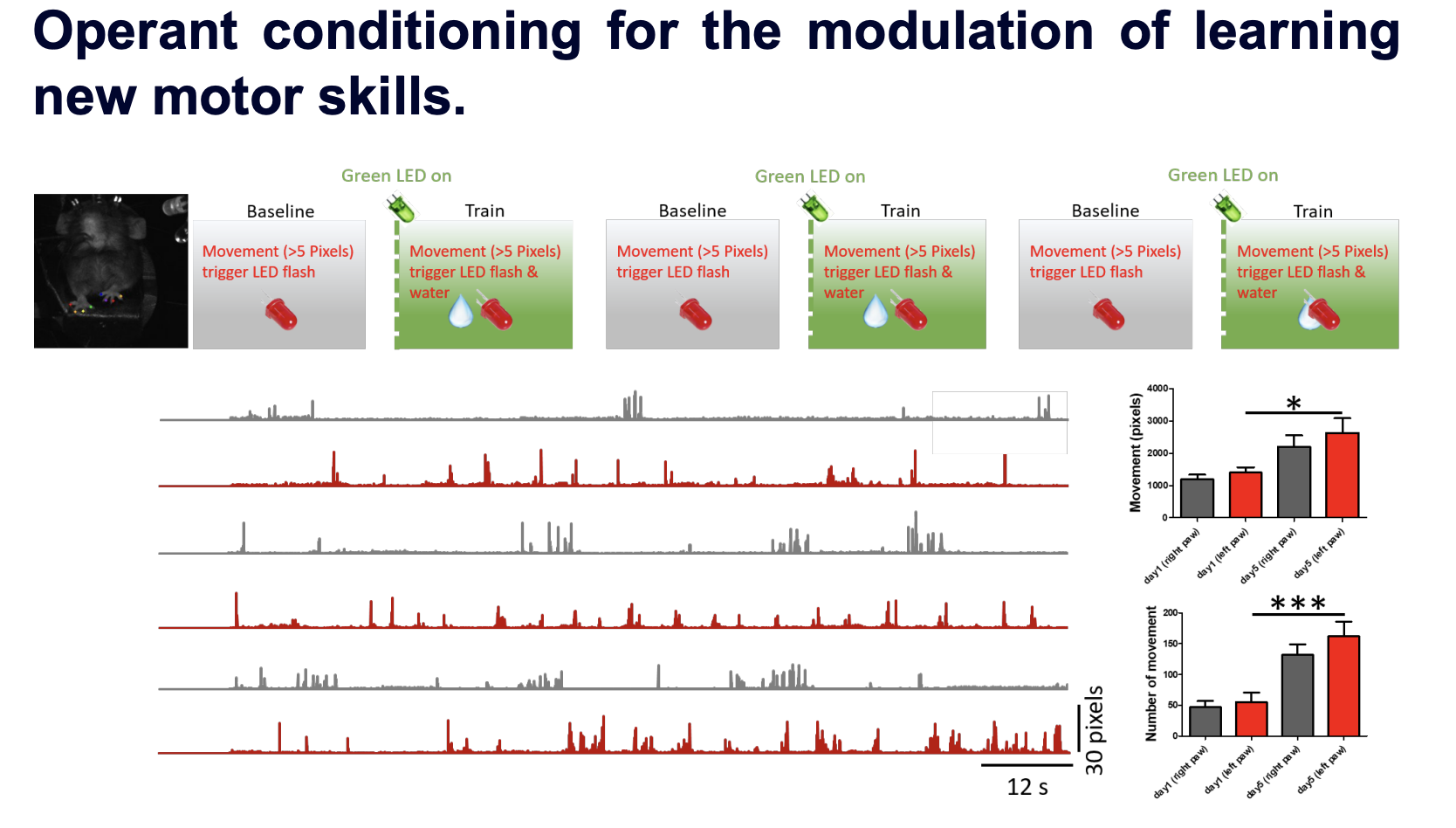

Operant conditioning-based closed-loop neuromodulation for regulating brain activity has shown promise in producing positive behavioral changes associated with functional and structural connectivity. An important question is whether, and how, augmented sensory feedback can be designed to improve the learning of motor skills. We hypothesize that auditory information coded by learners' actions can enhance the learning of complex motor skills. The ability to track and categorize the movement of specific body parts in real time opens the possibility of manipulating motor feedback, allowing detailed exploration of the neural basis of behavioral control.

Method:

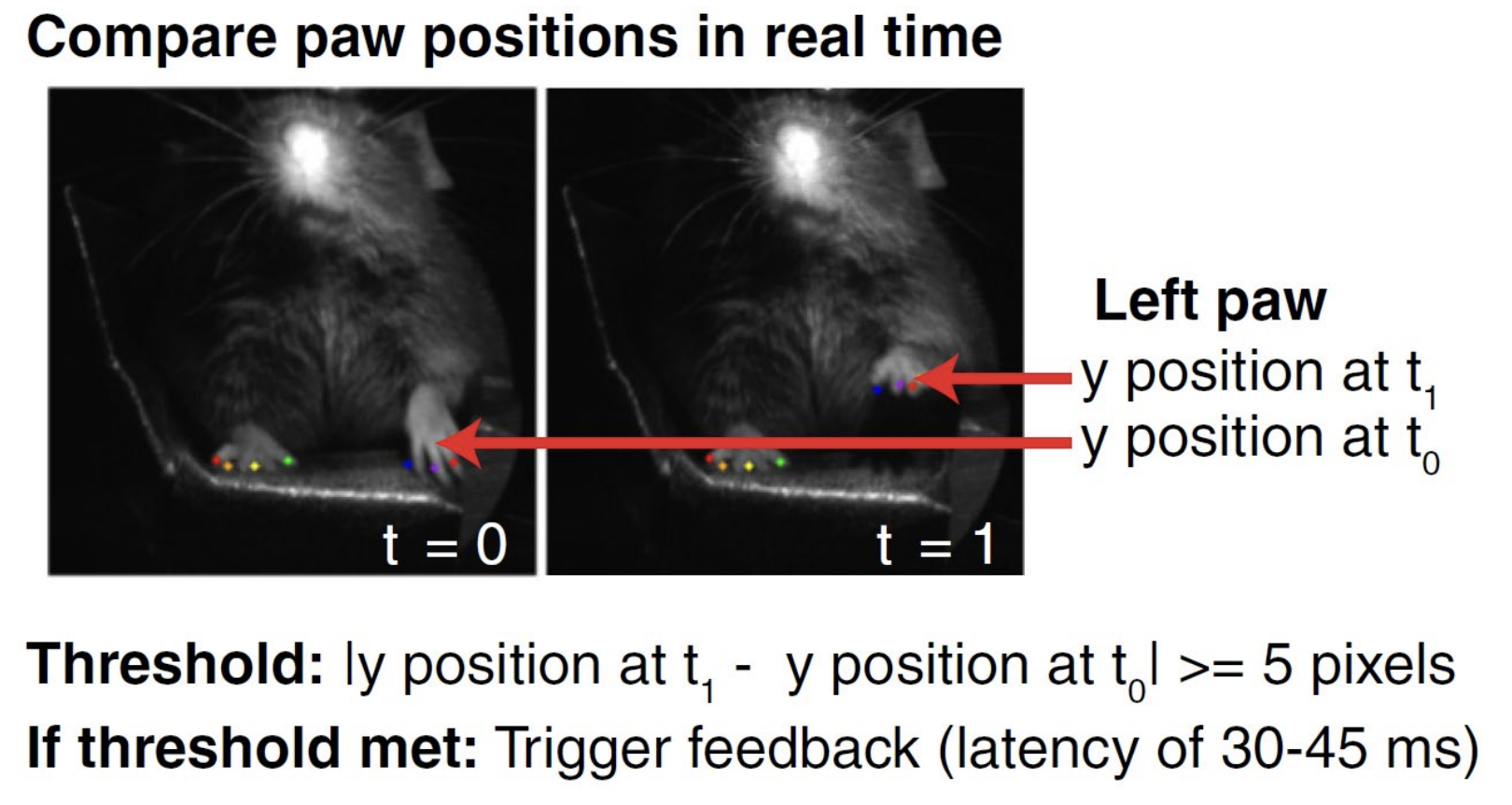

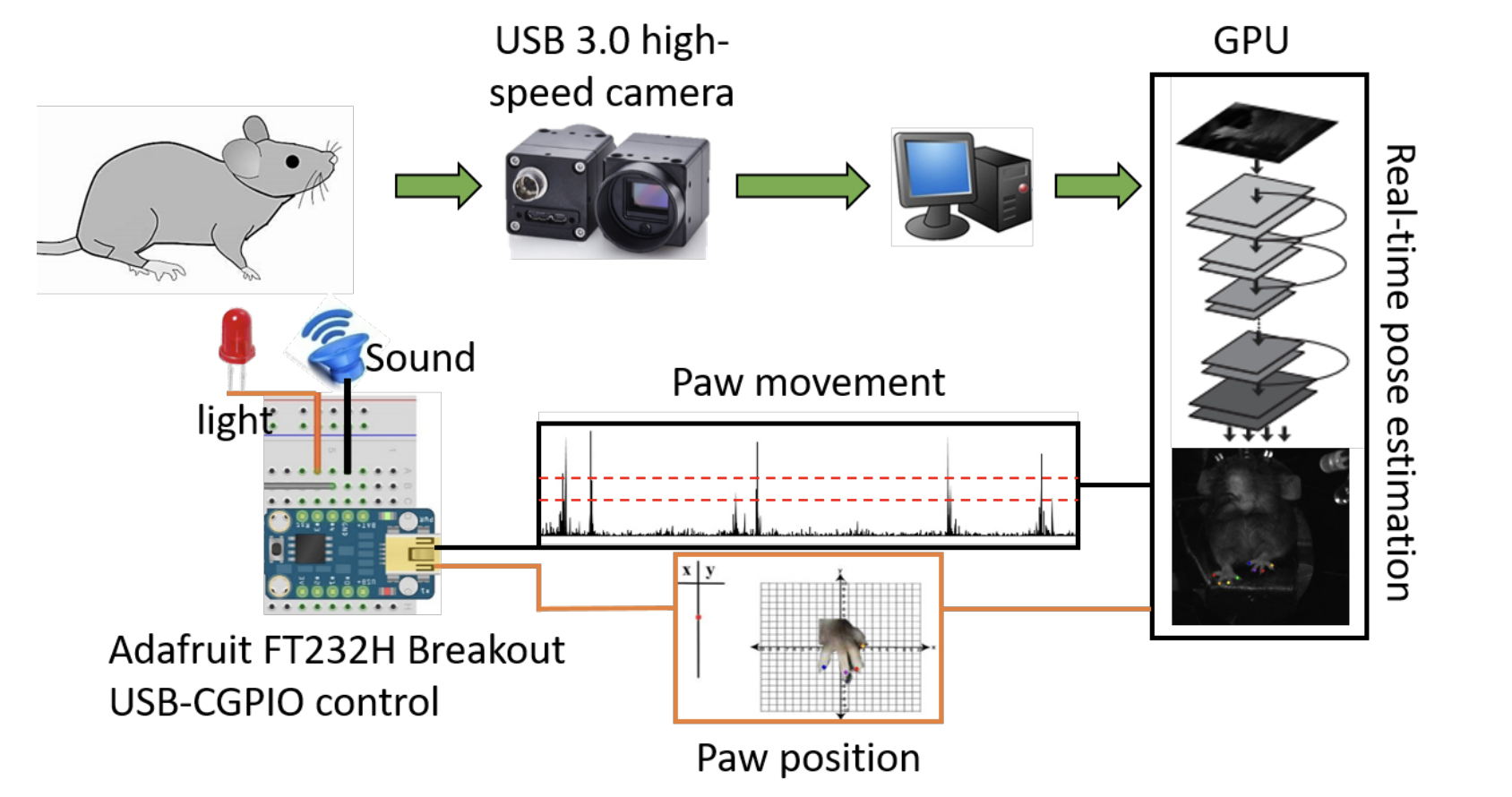

We developed a software and hardware system built on DeepLabCut, a robust deep-neural-network framework for movement tracking, which enables real-time estimation of mouse paw and digit movements at high frame rates and with high accuracy. Using this approach, we demonstrate that movement-generated feedback is feasible by triggering visual or auditory stimulation when the movement of a user-defined body part selectively exceeds a preset threshold.
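The threshold-triggering step can be illustrated with a minimal sketch. It assumes that the tracker delivers, for each frame, a mapping from body-part name to `(x, y, likelihood)`, similar to DeepLabCut-style output. It also interprets "selectively" to mean that the target part must exceed the threshold while the other tracked parts stay below it. The class `ThresholdTrigger`, its parameters, and the frame-to-frame pixel-displacement measure are hypothetical illustrations, not the authors' implementation. Actual stimulus delivery (tone or light) is left out.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical per-frame pose format: body-part name -> (x, y, likelihood).
Pose = Dict[str, Tuple[float, float, float]]


@dataclass
class ThresholdTrigger:
    """Hypothetical sketch: decide per frame whether to fire feedback.

    Feedback fires when the target body part moves farther than
    `threshold_px` between consecutive frames. If `selective` is set,
    no other tracked part may also exceed the threshold on that frame.
    """
    target: str                   # user-defined body part, e.g. "paw"
    threshold_px: float           # preset movement threshold (pixels per frame)
    min_likelihood: float = 0.9   # skip low-confidence pose estimates
    selective: bool = True        # require other parts to stay below threshold
    _prev: Optional[Pose] = field(default=None, init=False, repr=False)

    def _displacement(self, part: str, pose: Pose) -> Optional[float]:
        # Euclidean displacement since the previous frame, or None if unavailable.
        if self._prev is None or part not in self._prev or part not in pose:
            return None
        x0, y0, p0 = self._prev[part]
        x1, y1, p1 = pose[part]
        if min(p0, p1) < self.min_likelihood:
            return None
        return math.hypot(x1 - x0, y1 - y0)

    def update(self, pose: Pose) -> bool:
        """Return True if stimulation should be triggered for this frame."""
        fire = False
        d = self._displacement(self.target, pose)
        if d is not None and d > self.threshold_px:
            fire = True
            if self.selective:
                # Suppress feedback if any other part also moved past threshold.
                for part in pose:
                    if part == self.target:
                        continue
                    other = self._displacement(part, pose)
                    if other is not None and other > self.threshold_px:
                        fire = False
                        break
        self._prev = pose
        return fire


if __name__ == "__main__":
    trigger = ThresholdTrigger(target="paw", threshold_px=5.0)
    frames = [
        {"paw": (10.0, 10.0, 0.99), "nose": (50.0, 50.0, 0.99)},
        {"paw": (11.0, 10.0, 0.99), "nose": (50.0, 50.0, 0.99)},  # paw moves 1 px
        {"paw": (20.0, 10.0, 0.99), "nose": (50.0, 51.0, 0.99)},  # paw 9 px, nose 1 px
        {"paw": (30.0, 10.0, 0.99), "nose": (60.0, 51.0, 0.99)},  # both move 10 px
    ]
    print([trigger.update(f) for f in frames])  # [False, False, True, False]
```

In a live setup, `update` would be called once per tracked frame. A `True` result would then drive a sound card or LED. Checking likelihood first keeps low-confidence pose estimates from producing spurious feedback.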

Discussion & Conclusion:

By combining deep-learning-based movement tracking with movement-coded auditory feedback, our closed-loop feedback system effectively improves motor skill learning in mice and opens previously underexplored avenues for treating brain disorders.